AI Governance Frameworks: What Enterprise Leaders Must Know in 2026

December 7, 2025

Artificial Intelligence

Companies have invested heavily in AI development, but only 35% have AI governance frameworks in place. AI-driven cyberattacks pose a serious threat, with a 300% surge between 2020 and 2023. Organizations without proper safeguards face substantial risks.

McKinsey's Technology Trends Outlook reports a worrying decline in trust in AI companies, from 61% in 2019 to 53% in 2026. The picture looks even more concerning given that 95% of executives have dealt with at least one AI-related problem in their enterprise. These numbers make it clear: AI governance frameworks are no longer optional for responsible business leaders.

Enterprise AI governance faces new challenges heading into 2026. Half of all governments worldwide are expected to implement responsible AI regulations by 2027. Public concern remains high, with 68% of Americans worried about unethical AI use in decision-making. The good news? Companies that maintain strong AI governance frameworks enjoy 30% higher consumer trust ratings.

This piece offers a ground-level look at everything business leaders need to know about AI governance frameworks. You'll learn about core principles, implementation strategies, and ways to manage AI throughout its lifecycle. We'll cover global standards, risk management approaches, and practical steps to build compliant, ethical, and trustworthy AI systems.

Understanding AI Governance in the Enterprise Context

Image Source: SlideBazaar


What is AI governance and why it matters in 2026

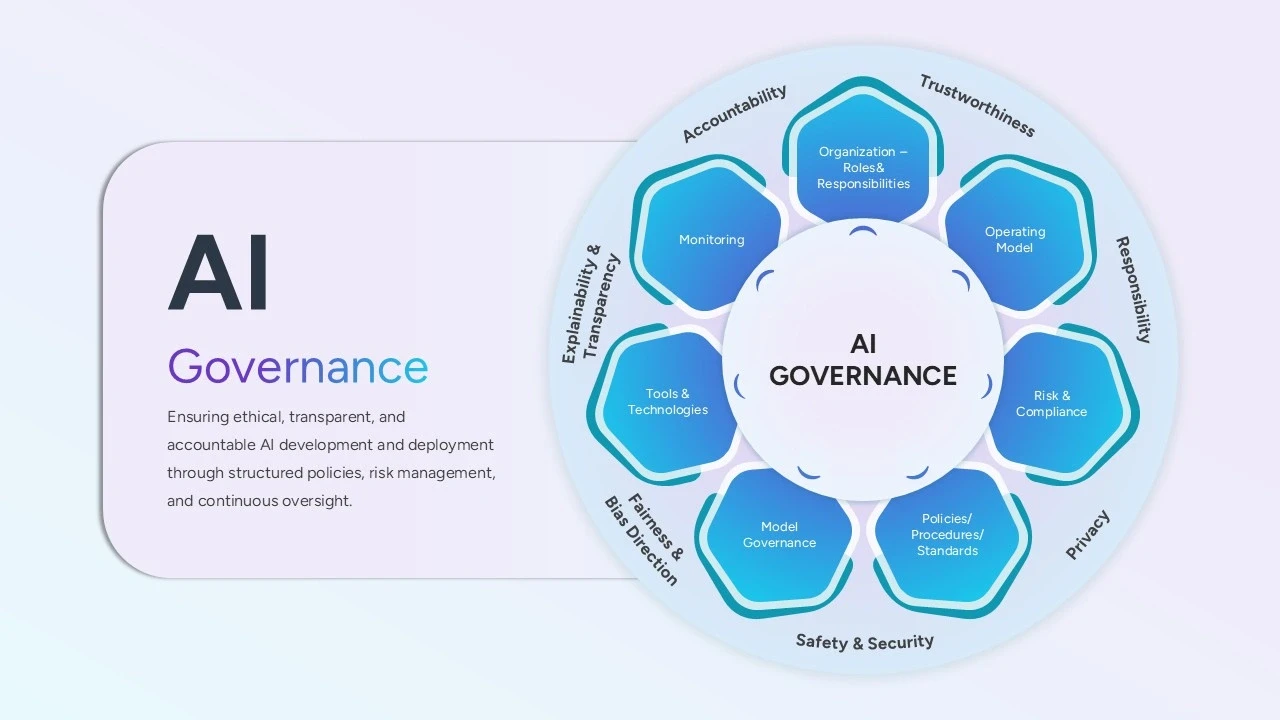

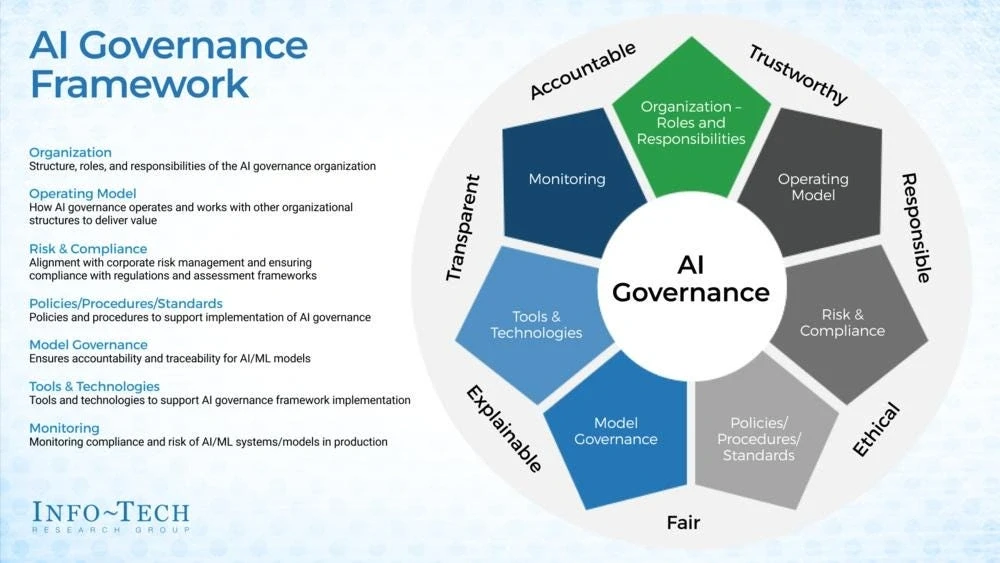

AI governance covers the policies, guidelines, and oversight frameworks that help organizations develop, deploy, and use AI safely, ethically, and responsibly. In 2026 this framework has become crucial: 88% of organizations use AI in at least one business function, but governance hasn't kept up. Only 39% of Fortune 100 companies disclose any form of board oversight of AI, leaving a dangerous gap between adoption and responsible management.

Leaders can't ignore the facts: 62% express deep concern about AI compliance, a sign that they recognize what's at stake. Companies with digitally and AI-savvy boards outperform their peers by 10.9 percentage points in return on equity, which shows the real business value of proper governance.

Enterprise AI governance vs traditional IT governance

Enterprise AI governance builds on existing data and IT governance practices, but it tackles challenges that traditional approaches handle poorly. The key difference is that AI systems behave differently from conventional IT systems.

Traditional IT systems run predetermined functions with predictable outcomes. AI systems learn from data patterns and produce different outputs based on their training data and model setup. This behavior needs governance frameworks that can adapt to changing model performance and handle ethical implications of automated decision-making.

AI systems often work like "black boxes" where the reasoning behind specific outputs stays unclear, whereas traditional systems provide clear audit trails. This lack of transparency calls for specialized governance approaches that focus on explainability and accountability.

The role of leadership in responsible AI governance

AI governance starts at the top—it's a CEO-level priority. A 2023 survey revealed that companies whose CEOs actively participate in responsible AI initiatives gain 58% more business benefits than those with uninvolved leaders.

CEOs and their senior leadership teams must set the right tone and culture, sending a clear message about using AI responsibly and ethically. In practice, this means establishing a committee of senior leaders to oversee the development and implementation of governance programs.

The best approach treats AI governance as a team effort. Legal, compliance, IT, data science, security, and executive leadership work together to align AI decisions with business values and ethical principles.

Core Principles of AI Governance Frameworks

Image Source: Accelirate


AI governance frameworks need fundamental principles to tackle the unique challenges of artificial intelligence technologies. Enterprise leaders must understand these basic building blocks to develop their governance strategies.

Human oversight and explainability in AI systems

Meaningful human control over AI systems is crucial. The EU AI Act requires high-risk AI systems to include appropriate human-machine interfaces so that people can oversee them effectively. Humans need to be able to monitor, intervene in, and override AI decisions to prevent risks to health, safety, and fundamental rights. Explainable AI (XAI) techniques help stakeholders understand how AI makes decisions; without this transparency, AI remains a "black box," which damages trust and accountability.
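One widely used model-agnostic XAI technique is permutation importance: shuffle one input feature at a time and measure how much accuracy falls. A large drop means the model leans heavily on that feature. The sketch below is a minimal pure-Python illustration; `toy_model`, the two features, and the data are hypothetical stand-ins for a real model and validation set.

```python
import random

def accuracy(model, X, y):
    """Fraction of rows the model labels correctly."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean accuracy drop when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Work on a copy so the original data is never modified
            shuffled = [list(row) for row in X]
            for row, value in zip(shuffled, column):
                row[j] = value
            drops.append(baseline - accuracy(model, shuffled, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical toy model that only looks at feature 0 ("income")
def toy_model(row):
    return int(row[0] > 50)

X = [[30, 1], [60, 0], [80, 1], [40, 0], [55, 1], [20, 0], [90, 0], [45, 1]]
y = [toy_model(row) for row in X]
scores = permutation_importance(toy_model, X, y)
print(scores)  # feature 1 scores 0.0 because the model ignores it
```

Because the technique only needs predictions, it works the same way on a black-box model, which is what makes it useful for oversight teams that cannot inspect model internals.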

Fairness, non-discrimination, and bias mitigation

AI systems should deliver equal benefits to everyone while preventing bias and discrimination. Teams need to spot different types of bias: contextual bias arises when models behave differently across settings, labeling bias comes from human assumptions that reinforce inequities, and proxy variables can cause indirect discrimination. Organizations should use both preventive tools, such as standardized documentation and risk assessments, and detective methods, such as thorough testing.
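As one example of a detective test, the sketch below computes each group's selection rate and the disparate impact ratio (lowest rate divided by highest). The 0.8 cut-off is the "four-fifths" heuristic from US employment guidance; it is one common screening rule among several, and the outcomes and group labels here are hypothetical.

```python
from collections import defaultdict

def selection_rates(outcomes, groups):
    """Share of favorable outcomes (1) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(outcomes, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(outcomes, groups)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no favorable outcomes in any group")
    return min(rates.values()) / highest

# Hypothetical loan-approval results for two applicant groups
outcomes = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
ratio = disparate_impact_ratio(outcomes, groups)
if ratio < 0.8:  # four-fifths heuristic: a flag for human review, not a verdict
    print(f"Review needed: disparate impact ratio {ratio:.2f}")
```

A ratio below the threshold does not prove discrimination; it tells the team where to look for proxy variables or labeling bias.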

Privacy and data protection compliance

Privacy protection has become vital as AI systems process huge amounts of sensitive information. GDPR principles require organizations to collect personal data only for specified, lawful purposes and to delete or anonymize it once it is no longer needed for those purposes.
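Purpose-based retention can be enforced with a routine job that compares each record's age against the retention period for the purpose it was collected for. This is a minimal sketch; the `RETENTION` table, purpose names, and `Record` fields are hypothetical, and real periods should come from legal review.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per processing purpose
RETENTION = {
    "fraud_model_training": timedelta(days=365),
    "support_chat_analysis": timedelta(days=90),
}

@dataclass
class Record:
    record_id: str
    purpose: str
    collected_at: datetime

def split_by_retention(records, now):
    """Return (keep, purge): records inside vs. past their purpose's retention window."""
    keep, purge = [], []
    for rec in records:
        limit = RETENTION.get(rec.purpose)
        # Conservative choice for this sketch: a record with no documented purpose is purged
        if limit is None or now - rec.collected_at > limit:
            purge.append(rec)
        else:
            keep.append(rec)
    return keep, purge

now = datetime(2026, 1, 1, tzinfo=timezone.utc)
records = [
    Record("r1", "support_chat_analysis", datetime(2025, 12, 1, tzinfo=timezone.utc)),
    Record("r2", "support_chat_analysis", datetime(2025, 9, 1, tzinfo=timezone.utc)),
    Record("r3", "unknown", datetime(2025, 12, 15, tzinfo=timezone.utc)),
]
keep, purge = split_by_retention(records, now)
print([r.record_id for r in purge])  # ['r2', 'r3']
```

The purge list would then feed a deletion or anonymization step, and that step itself should be logged for audit purposes.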

Accountability and traceability in AI decision-making

Someone must take responsibility for what AI systems do. Organizations need detailed audit trails that document inputs, outputs, model behavior, and decision logic. A clear record of data and decision-making processes includes three main parts: data lineage, model lineage, and decision lineage.
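The three kinds of lineage can be captured together in a single audit entry per decision. The sketch below is one possible shape, assuming JSON-serializable inputs; the field names, model name, and dataset ID are hypothetical. It also chains each entry to the previous entry's SHA-256 hash, a common way to make silent edits to the log detectable.

```python
import hashlib
import json
from datetime import datetime, timezone

def fingerprint(obj):
    """Stable SHA-256 hex digest of a JSON-serializable object."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def append_decision(log, inputs, output, reason, model_name, model_version, dataset_id):
    """Append one audit entry covering data, model, and decision lineage."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "data_lineage": {"training_dataset": dataset_id, "input_hash": fingerprint(inputs)},
        "model_lineage": {"name": model_name, "version": model_version},
        "decision_lineage": {"output": output, "reason": reason},
        # Linking to the previous entry's hash makes edits or reordering detectable
        "prev_hash": log[-1]["entry_hash"] if log else None,
    }
    entry["entry_hash"] = fingerprint(entry)
    log.append(entry)
    return entry

def verify_chain(log):
    """False means some entry's contents or order no longer match the recorded hashes."""
    prev = None
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["prev_hash"] != prev or fingerprint(body) != entry["entry_hash"]:
            return False
        prev = entry["entry_hash"]
    return True

audit_log = []
append_decision(audit_log, {"income": 52000, "tenure_months": 18}, "decline",
                "score below cutoff", "credit-risk", "2.3.1", "loans-2025-q3")
append_decision(audit_log, {"income": 88000, "tenure_months": 40}, "approve",
                "score above cutoff", "credit-risk", "2.3.1", "loans-2025-q3")
print(verify_chain(audit_log))  # True
audit_log[0]["decision_lineage"]["output"] = "approve"  # simulated tampering
print(verify_chain(audit_log))  # False
```

Storing only a hash of the inputs keeps personal data out of the log while still letting auditors confirm which inputs produced a given decision.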

Security and resilience in AI model deployment

AI models create security risks beyond ordinary cybersecurity concerns. Some model file formats can execute code when they are loaded, which could let attackers take over cloud systems, steal data, or launch ransomware attacks. AI-specific security measures should include flexible controls, continuous monitoring, and security checks tailored to the teams and environments using the models.
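One basic control is to refuse to load any model artifact whose SHA-256 digest does not match the value recorded when the model was reviewed and released. The sketch below uses only the standard library; the file path and `EXPECTED_DIGEST` in the usage comment are hypothetical. Pinning digests confirms you are loading the reviewed file, but it does not make an unsafe serialization format safe, so data-only formats remain preferable where available.

```python
import hashlib
import hmac
from pathlib import Path

def sha256_of(path):
    """Hash a file in 1 MiB chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_artifact(path, expected_sha256):
    """Raise ValueError unless the file matches the digest recorded at release time."""
    actual = sha256_of(path)
    # compare_digest avoids leaking how many leading characters matched
    if not hmac.compare_digest(actual, expected_sha256.lower()):
        raise ValueError(f"checksum mismatch for {path}: got {actual}")

# Usage: call verify_artifact("models/credit-risk-2.3.1.bin", EXPECTED_DIGEST) before loading
```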

Global and Industry AI Governance Standards

AI's growing influence has led to the creation of several international frameworks that guide responsible development and use.

EU AI Act: Risk-based classification and compliance

The EU AI Act entered into force in August 2024 and applies in stages, with most high-risk requirements scheduled to apply from August 2026. It groups AI systems into four risk categories. The act bans unacceptable-risk systems outright, including harmful manipulation, social scoring, and emotion recognition in workplaces; these prohibitions have applied since February 2025. High-risk systems must meet strict requirements such as risk assessment, high-quality datasets, detailed documentation, and human oversight. Systems with transparency risks need specific disclosures: chatbots, for example, must tell people they are interacting with a machine.

NIST AI Risk Management Framework (AI RMF)

NIST released the AI RMF in 2023 with four core functions: Govern, Map, Measure, and Manage. The framework promotes continuous improvement and stakeholder involvement while assessing risks through a socio-technical lens.

ISO/IEC 42001:2023 for AI Management Systems

This standard defines requirements for establishing an Artificial Intelligence Management System (AIMS) within an organization. It applies the Plan-Do-Check-Act methodology to AI-specific challenges such as ethical considerations, transparency, and continuous learning.

OECD and UNESCO ethical AI principles

The OECD principles rest on five key pillars: inclusive growth, respect for human rights and democratic values, transparency, robustness, and accountability.

G7 Code of Conduct for foundation models

The G7 introduced this voluntary code in 2023 for advanced AI system developers. The code emphasizes security controls, content authentication mechanisms, and risk mitigation measures.

Implementing AI Governance Across the Lifecycle

Image Source: Medium

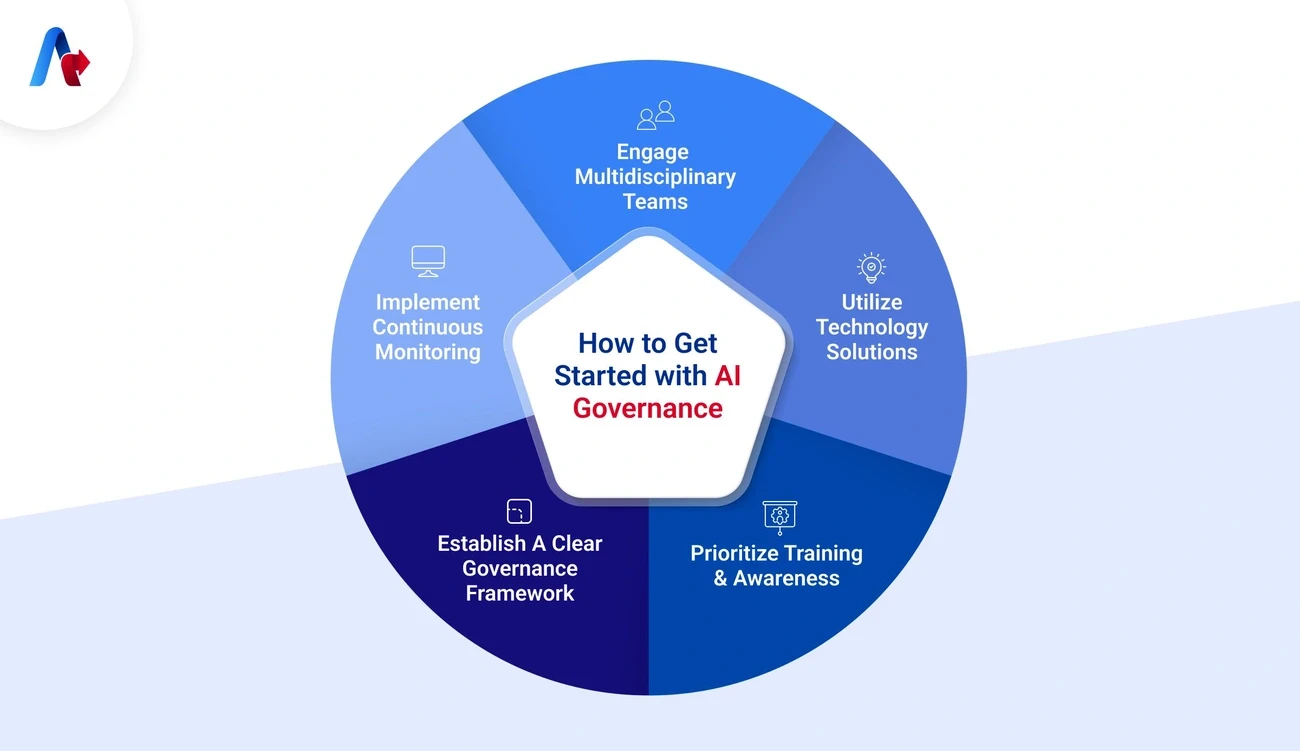

Organizations need structured oversight at every stage to implement AI governance successfully. AI systems evolve constantly and need regular refinement - they're not "set-and-forget" solutions.

Design phase: Mapping use cases and risk levels

The design phase starts with detailed use case descriptions that identify all stakeholders. Risk assessments come next. Teams should break down potential harms in different areas, including fairness and robustness. These assessments should measure risk for each stakeholder using likelihood and severity scales to help prioritize what needs fixing.
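A likelihood-times-severity score is a simple way to turn these assessments into a ranked list. The sketch assumes 1-to-5 scales for both dimensions; the stakeholders, harm areas, ratings, and the `band` cut-offs are all hypothetical and should be calibrated to your organization's risk appetite.

```python
from dataclasses import dataclass

@dataclass
class Harm:
    stakeholder: str
    area: str        # e.g. "fairness", "robustness"
    likelihood: int  # 1 (rare) to 5 (almost certain)
    severity: int    # 1 (negligible) to 5 (critical)

    def __post_init__(self):
        for name in ("likelihood", "severity"):
            if not 1 <= getattr(self, name) <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    @property
    def score(self):
        return self.likelihood * self.severity

def band(score):
    """Hypothetical cut-offs on the 1-25 score range."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"

harms = [
    Harm("loan applicants", "fairness", likelihood=3, severity=5),
    Harm("operations team", "robustness", likelihood=2, severity=3),
    Harm("customers", "privacy", likelihood=4, severity=4),
]
for h in sorted(harms, key=lambda h: h.score, reverse=True):
    print(f"{h.score:>2} {band(h.score):<6} {h.stakeholder} / {h.area}")
```

Multiplying the two scales is the conventional risk-matrix approach; some teams weight severity more heavily so that rare but catastrophic harms are never ranked below frequent minor ones.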

Deployment phase: Access control and audit logging

Access controls are the foundation of AI security during deployment. Teams should apply the least-privilege principle so users get only the minimum access they need. Audit logging builds accountability: these logs record who used AI systems, which resources they accessed, and any policy violations.
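Least privilege and audit logging fit naturally into the same check: every request is evaluated against a role's minimal permission set, and every outcome, allowed or denied, is recorded. This is a minimal in-memory sketch; the role names, permission strings, and model name are hypothetical, and a production system would write to durable, access-controlled log storage rather than a Python list.

```python
import json
from datetime import datetime, timezone

# Hypothetical roles: each gets only the actions it needs (least privilege)
ROLE_PERMISSIONS = {
    "analyst": {"model:predict"},
    "ml_engineer": {"model:predict", "model:deploy"},
    "auditor": {"audit:read"},
}

AUDIT_LOG = []

def authorize(user, role, action, resource):
    """Check a request against the role's permissions and record the outcome."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())  # unknown roles get nothing
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "resource": resource,
        # Denied requests double as a record of attempted policy violations
        "decision": "allow" if allowed else "deny",
    })
    return allowed

print(authorize("dana", "analyst", "model:predict", "credit-risk-2.3.1"))  # True
print(authorize("dana", "analyst", "model:deploy", "credit-risk-2.3.1"))   # False
print(json.dumps(AUDIT_LOG[-1]))
```

Defaulting unknown roles to an empty permission set means a misconfiguration fails closed rather than open.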

Monitoring phase: Drift detection and retraining

Model drift happens when AI performance degrades because real-world conditions no longer match the training data. Effective monitoring tracks several signals. Performance drops of 1-3% should trigger alerts. Shifts in data distribution need investigation when the Population Stability Index (PSI) exceeds 0.1. Changes in prediction distributions and error patterns also matter. Regular retraining with fresh data helps fix drift issues.
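The Population Stability Index compares how a feature's values are spread across bins at training time (shares e_i) versus in production (shares a_i): PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i). Identical distributions score 0, and the score grows as they diverge. This sketch uses equal-width bins over the baseline range and a small epsilon for empty bins; both are common but not universal choices (quantile bins are another option), and the data is hypothetical.

```python
import math

def _bin_shares(values, edges):
    """Share of values per bin; values beyond the outer edges go to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = 0
        while i < len(counts) - 1 and v >= edges[i + 1]:
            i += 1
        counts[i] += 1
    return [c / len(values) for c in counts]

def psi(baseline, current, n_bins=10, eps=1e-6):
    """Population Stability Index of `current` measured against `baseline`."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / n_bins
    edges = [lo + k * width for k in range(n_bins + 1)]
    expected = _bin_shares(baseline, edges)
    actual = _bin_shares(current, edges)
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total

baseline = [i / 100 for i in range(1000)]  # hypothetical training-time feature values
current = [x + 3 for x in baseline]        # the same feature after a production shift
print(round(psi(baseline, baseline), 4))   # 0.0
score = psi(baseline, current)
if score > 0.1:  # investigation threshold cited above
    print(f"Investigate: PSI {score:.2f}")
```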

Ongoing risk management and compliance audits

Teams should set clear triggers for "drift requiring action" to manage AI risks. Written protocols for regular compliance checks are essential. The end-to-end socio-technical algorithmic audit (E2EST/AA) method looks at systems in real-world contexts, examining specific data and affected subjects.
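Writing the triggers down as code keeps the "drift requiring action" definition explicit and reviewable. In this sketch the 0.02 accuracy-drop limit is an assumed value picked from inside the 1-3% band mentioned earlier, the 0.1 PSI limit matches the investigation threshold above, and the `DriftPolicy` and `evaluate` names are hypothetical. Per-feature PSI values are taken as inputs, however your team computes them.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DriftPolicy:
    max_accuracy_drop: float = 0.02  # assumed value inside the 1-3% alert band
    max_psi: float = 0.1             # PSI investigation threshold

def evaluate(policy, baseline_acc, current_acc, feature_psi):
    """Return triggered findings; an empty list means no action is required."""
    findings = []
    drop = baseline_acc - current_acc
    if drop >= policy.max_accuracy_drop:
        findings.append(f"accuracy dropped {drop:.3f} (limit {policy.max_accuracy_drop})")
    for name, value in sorted(feature_psi.items()):
        if value > policy.max_psi:
            findings.append(f"PSI for {name} is {value:.2f} (limit {policy.max_psi})")
    return findings

findings = evaluate(DriftPolicy(), baseline_acc=0.91, current_acc=0.88,
                    feature_psi={"income": 0.04, "age": 0.18})
for line in findings:
    print(line)
```

Freezing the policy object means thresholds can only change through a new, versioned policy, which gives compliance audits a clear record of which rules were in force at any time.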

Tools for explainability and observability

AI observability tools show what's happening at every level - from model performance to infrastructure health. The core team at https://www.kumohq.co/contact-us can guide you through setting up resilient governance tools. Key features include AI-powered anomaly detection, automated root-cause analysis, end-to-end lineage tracking, and smart alerts.
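Commercial tools advertise AI-powered anomaly detection; the simplest statistical stand-in is a rolling z-score, which flags a metric value (latency, error rate, prediction volume) that sits several standard deviations from its recent mean. The sketch below swaps in that plain technique; the window size, threshold, and latency figures are hypothetical.

```python
import statistics
from collections import deque

class ZScoreAlert:
    """Flag metric values far from the rolling mean of recent observations."""

    def __init__(self, window=30, threshold=3.0, min_history=10):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, value):
        """Return True if `value` is anomalous relative to the window, then record it."""
        anomalous = False
        if len(self.history) >= self.min_history:
            recent = list(self.history)
            mean = statistics.fmean(recent)
            stdev = statistics.pstdev(recent)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                anomalous = True
        self.history.append(value)
        return anomalous

alert = ZScoreAlert(window=30, threshold=3.0)
latencies_ms = [100, 101, 99, 100, 102, 98, 100, 101, 99, 100, 100.5, 180]
flags = [alert.observe(v) for v in latencies_ms]
print(flags[-1])  # True: 180 ms is far outside the recent spread
```

This sketch adds anomalous values to the window as well; excluding them instead keeps the baseline clean but can hide a genuine, lasting shift in the metric.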

Conclusion

The AI landscape of 2026 brings new possibilities and major challenges for enterprise leaders. Strong governance frameworks are now the foundation of responsible AI deployment rather than optional add-ons. Leaders need to recognize that good AI governance goes beyond traditional IT frameworks, because AI systems keep learning and changing in ways that aren't always transparent.

Success requires support from everyone, from the CEO down to every team member. Every AI initiative should follow the key principles: human oversight, fairness, privacy protection, accountability, and security. These principles align with the global standards shaping today's regulations.

Companies need a complete approach to AI governance across the entire lifecycle. This means assessing risks thoroughly during design, enforcing strong access controls during deployment, and setting up systems that watch for model drift. Companies that do this not only reduce risk but also see real benefits, such as the 30% higher trust ratings that organizations with strong governance frameworks receive.

Enterprise leaders should make AI governance strategies that fit their specific needs a top priority. Companies that struggle with implementation can reach out to specialized experts at https://www.kumohq.co/contact-us for help building governance frameworks that balance innovation with responsibility.

Moving forward requires vigilance, flexibility, and dedication to ethical principles. The companies that thrive in the AI era will be those that build governance frameworks to harness AI's power while maintaining trust and regulatory compliance. The way you handle governance now will determine your AI success tomorrow.

FAQs

Q1. What is AI governance and why is it important for businesses in 2026?

AI governance refers to the policies and frameworks that ensure responsible AI development and use. It's crucial in 2026 because of increased AI adoption, rising cyber threats, and growing regulatory pressure. Effective governance helps maintain stakeholder trust and compliance while mitigating risks associated with AI implementation.

Q2. How does AI governance differ from traditional IT governance?

AI governance addresses unique challenges posed by AI systems' dynamic and often opaque nature. Unlike traditional IT systems with predictable outcomes, AI systems learn from data and can produce varying outputs. This requires specialized approaches focusing on explainability, accountability, and ethical implications of automated decision-making.

Q3. What are the core principles of AI governance frameworks?

Key principles include human oversight and explainability, fairness and bias mitigation, privacy and data protection, accountability and traceability in decision-making, and security and resilience in AI model deployment. These principles guide the responsible development and use of AI systems.

Q4. What global standards are shaping AI governance in 2026?

Important standards include the EU AI Act, NIST AI Risk Management Framework, ISO/IEC 42001:2023 for AI Management Systems, OECD and UNESCO ethical AI principles, and the G7 Code of Conduct for foundation models. These frameworks provide guidelines for responsible AI development and use across different risk levels and applications.

Q5. How can organizations implement AI governance across the AI lifecycle?

Implementation involves mapping use cases and risk levels during design, establishing access controls and audit logging during deployment, and continuous monitoring for drift detection and retraining. Organizations should also conduct ongoing risk management, compliance audits, and utilize tools for explainability and observability throughout the AI lifecycle.